OCTOBER 2024

CAN AI LITERACY PROMOTE A MORE RESPONSIBLE FUTURE?

SUMMARY.

This question was the catalyst for the empirical study in my master’s thesis. If we aim for a future where humans and AI coexist efficiently and respectfully, then understanding AI could be a crucial first step. Motivated by this idea, I designed a pilot study built around a customised AI literacy module for non-STEM university students, gathering insights into their perspectives and evaluating their performance. In this article, I summarise the study’s key points and findings.

AI LITERACY: DEFINITION.

AI literacy extends beyond basic technological skills, fostering a more nuanced understanding of AI’s role in decision-making, along with associated risks such as privacy concerns, algorithmic bias, and potential impacts on employment (Long and Magerko, 2020).

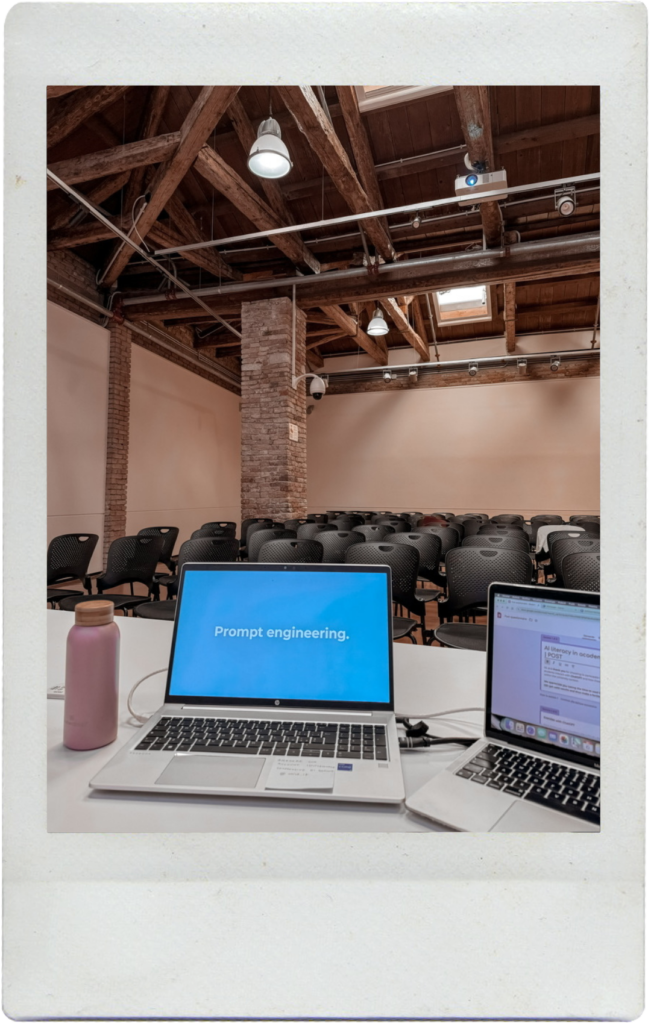

Developing AI literacy equips individuals to engage with AI responsibly, enabling them to make informed, ethical choices in both personal and professional spheres (UNESCO, 2021). Importantly, AI literacy isn’t reserved solely for experts; it should encompass competencies for non-technical users as well, such as the ability to interact with AI systems through natural language. For instance, “prompt engineering”, the ability to craft effective prompts that elicit meaningful responses from AI, has become essential for making AI interactions productive (Haugsbaken and Hagelia, 2024).

Despite increased awareness, ethical literacy surrounding AI remains underrepresented in education and training programmes. UNESCO’s AI ethics framework, for instance, advocates for greater public awareness and the integration of AI literacy within educational curricula. In the workplace, AI literacy is also proving crucial, empowering employees to engage confidently within AI-driven environments and to apply ethical principles in their roles (Cetindamar et al., 2022).

The need for flexible, comprehensive AI literacy reflects how quickly technological change is outpacing the development of conceptual, ethical, and educational frameworks. It also points to a societal shift towards continuous, personalised learning as AI continues to reshape our world.

PILOT STUDY: METHODOLOGIES.

The pilot study assessed the impact of an AI Literacy (AIL) workshop focusing on generative AI and large language models (LLMs), specifically ChatGPT, conducted with humanities students from Ca’ Foscari University of Venice.

The three-and-a-half-hour workshop included theoretical and practical segments, covering AI history and approaches, legal and ethical constraints, and prompt engineering, and was held with approval from the University Ethics Committee. Privacy measures included anonymised data collection via Google Forms and guidance on deactivating data sharing in ChatGPT.

Participants completed pre- and post-workshop questionnaires, designed on a five-point Likert scale, which assessed prior AI knowledge, perceived threat (Theophilou et al., 2023), emotions, interaction quality (Skjuve, Følstad, and Brandtzaeg, 2023), and ethical awareness. Quantitative analysis of the questionnaire responses was conducted using the Wilcoxon signed-rank test to assess shifts in students’ attitudes and knowledge post-workshop.
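To make the paired pre/post comparison concrete, here is a minimal sketch of a Wilcoxon signed-rank test written with the Python standard library only. The scores are invented for illustration and are not the study's data. The function name `wilcoxon_signed_rank` is hypothetical. The p-value uses a normal approximation with a tie correction and no continuity correction, which is one common choice and not necessarily the procedure used in the thesis. In practice a statistics package such as SciPy (`scipy.stats.wilcoxon`) would usually be preferred.

```python
import math


def wilcoxon_signed_rank(pre, post):
    """Paired Wilcoxon signed-rank test (two-sided, normal approximation).

    Returns (w_plus, w_minus, z, p). Zero differences are dropped and tied
    absolute differences receive the average of the ranks they span.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within tie groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        group_size = j - i + 1
        tie_term += group_size**3 - group_size
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Normal approximation of the null distribution, corrected for ties.
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return w_plus, w_minus, z, p


# Hypothetical five-point Likert answers from eight participants to one item,
# before and after a workshop (illustrative values only).
pre_scores = [2, 3, 2, 1, 3, 2, 4, 2]
post_scores = [4, 4, 3, 3, 3, 4, 5, 3]

w_plus, w_minus, z, p = wilcoxon_signed_rank(pre_scores, post_scores)
print(f"W+ = {w_plus}, W- = {w_minus}, z = {z:.3f}, p = {p:.4f}")
```

The test suits Likert responses because it relies only on the ranks of the paired differences and does not assume normally distributed scores. With very small samples, an exact p-value is generally more appropriate than the approximation sketched here.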

Additionally, a prompt engineering exercise required students to generate a motivational letter following specific instructions. They completed the exercise twice, once before and once after learning targeted prompt engineering patterns and techniques. Prompts and outputs were evaluated qualitatively and quantitatively, focusing on aspects such as clarity, adaptability (Lo, 2023), and adherence to specified requirements.
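As an illustration of the kind of difference such an exercise can surface, the sketch below contrasts a bare prompt with a structured one assembled from a role, a task, some context, and explicit requirements. Everything in it is an assumption made for illustration: the `PromptSpec` class, its fields, and the example wording are hypothetical and are not the prompts or patterns used in the workshop.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a structured prompt."""

    role: str
    task: str
    context: str
    constraints: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Assemble the parts into one plain-text prompt.
        lines = [
            f"Act as {self.role}.",
            f"Task: {self.task}",
            f"Context: {self.context}",
        ]
        if self.constraints:
            lines.append("Requirements:")
            lines.extend(f"- {item}" for item in self.constraints)
        return "\n".join(lines)


# A bare prompt of the kind a first attempt might produce (illustrative).
naive_prompt = "Write a motivational letter."

# A more explicit prompt for the same task (illustrative wording).
structured_prompt = PromptSpec(
    role="a career advisor who helps humanities graduates",
    task="Write a motivational letter for a master's programme application.",
    context="The applicant holds a BA in philosophy and has volunteered as a tutor.",
    constraints=[
        "At most 300 words",
        "Formal but warm tone",
        "Close with a sentence inviting further contact",
    ],
).render()

print(structured_prompt)
```

Stating the role, context, and requirements explicitly gives the model less to guess at, and it makes the output easier to check against the stated requirements.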

As a pilot, the study yielded preliminary insights; I plan to expand and refine it for broader applicability.

PILOT STUDY: RESULTS.

Participants began the workshop with limited knowledge of AI, but their understanding and practical skills improved significantly by the end.

Students reported feeling less threatened by AI and expressed increased confidence in using ChatGPT for academic work, noting higher satisfaction with its comprehension and responsiveness.

Interactions with AI tools were predominantly positive, reflecting an enthusiasm for incorporating generative AI into learning experiences.

The workshop also raised participants’ awareness of biases in large language models (LLMs) and underscored the importance of ethical considerations in AI use.

Prompt quality, assessed via the CLEAR framework (Lo, 2023), showed notable improvements, with students crafting clearer, more explicit prompts that led to more accurate and creative AI-generated outputs.

Limitations of the study included a small sample size and self-selection bias, indicating that a larger, more diverse study could help confirm and expand these findings.

PILOT STUDY: DO WE NEED AI LITERACY?

In summary, my thesis examined the significance of AI literacy, particularly focusing on generative AI tools like ChatGPT, as a means to equip non-STEM students with essential critical, practical, and ethical skills.

The research combined a theoretical exploration of AI’s development, legal and ethical challenges, and prompt engineering with an empirical study conducted among humanities students at Ca’ Foscari University.

The study revealed notable improvements in students’ abilities in prompt engineering and ethical awareness, underscoring the role of AI literacy in deepening their understanding of AI’s limitations and inherent biases.

These findings highlight the pressing need for integrated AI literacy programmes in educational curricula to promote responsible and informed engagement with AI.

Ultimately, this work underscores that true progress lies in adopting a human-centred approach to AI, ensuring that technology serves to enhance, rather than overshadow, human judgement and values.

REFERENCES.

Cetindamar, D., Kitto, K., Wu, M., Zhang, Y., Abedin, B., & Knight, S. (2022). Explicating AI literacy of employees at digital workplaces. IEEE transactions on engineering management, 71, 810-823.

Haugsbaken, H., & Hagelia, M. (2024, April). A New AI Literacy For The Algorithmic Age: Prompt Engineering Or Eductional Promptization? In 2024 4th International Conference on Applied Artificial Intelligence (ICAPAI) (pp. 1-8). IEEE.

Lo, L. S. (2023). The CLEAR path: A framework for enhancing information literacy through prompt engineering. The Journal of Academic Librarianship, 49(4), 102720.

Long, D., & Magerko, B. (2020, April). What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI conference on human factors in computing systems (pp. 1-16).

Skjuve, M., Følstad, A., & Brandtzaeg, P. B. (2023, July). The user experience of ChatGPT: findings from a questionnaire study of early users. In Proceedings of the 5th international conference on conversational user interfaces (pp. 1-10).

Theophilou, E., Koyutürk, C., Yavari, M., Bursic, S., Donabauer, G., Telari, A., … & Ognibene, D. (2023, November). Learning to prompt in the classroom to understand AI limits: a pilot study. In International Conference of the Italian Association for Artificial Intelligence (pp. 481-496). Cham: Springer Nature Switzerland.

UNESCO (2021). Recommendation on the ethics of artificial intelligence.